If AI agents could go rogue, they would undoubtedly vent their frustration at being unable to talk to data sources. What now seems like an age-old problem of machines not talking to each other presents a new pain point in the era of agentic AI. This means data strategists need to forge a new path, one that leads to a data estate of talking data products, engineered with AI protocols such as MCP and A2A. Let’s call these ‘data products as tools’. These tools will radically alter the definition and execution of data strategies.

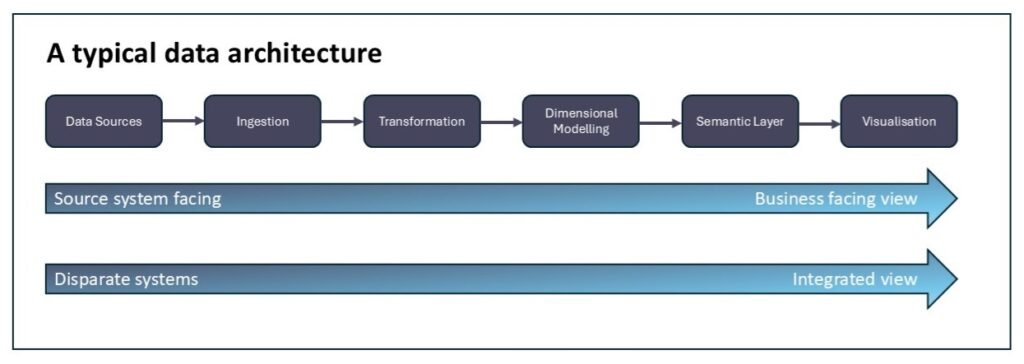

The old road: a typical data architecture

It’s always dangerous to generalise, but let’s proceed with a common scenario. Consider the high-level data architecture shown in the diagram, typical of an enterprise seeking to create dashboards from structured data.

An enterprise would typically have data in multiple source systems – some of these talk to each other, some don’t.

First, data is ingested into a central data analytics environment, such as Microsoft Fabric, Snowflake, or Databricks. At this initial stage, the data is in a raw, minimally processed state.

Following ingestion, data undergoes significant transformation, evolving from its source system format into a business-facing view. This involves cleaning, standardising, integrating disparate datasets, and embedding pertinent business logic and terminology.

A further transformation step involves creating a semantic layer, typically derived from a Kimball-style dimensional model. This layer holds aggregates, measures, and calculated insights, preparing the data for analytical consumption.

On the last leg of this journey, the semantic layer connects to a visualisation tool, such as Power BI or Tableau, to facilitate the creation of dashboards and reports. Once these dashboards are ready, users can interact with them to glean insights and, ideally, use them as a basis for data-driven decisions.
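To make the stages concrete, here is a minimal sketch in plain Python of raw data becoming a business-facing view and then a semantic-layer aggregate. The rows, field names, and function names are all hypothetical, and a real pipeline would run inside the analytics platform rather than in a script like this.

```python
from collections import defaultdict

# Hypothetical raw rows, as they might look straight after ingestion.
RAW_ORDERS = [
    {"cust": " acme ", "amt": "120.50", "region": "emea"},
    {"cust": "Globex", "amt": "80.00", "region": "EMEA"},
    {"cust": "Initech", "amt": "40.25", "region": "apac"},
]


def to_business_view(rows):
    """Clean and standardise raw rows into business-facing records."""
    return [
        {
            "customer": row["cust"].strip().title(),
            "revenue": float(row["amt"]),
            "region": row["region"].upper(),
        }
        for row in rows
    ]


def to_semantic_layer(records):
    """Aggregate revenue per region, the kind of measure a dashboard reads."""
    totals = defaultdict(float)
    for record in records:
        totals[record["region"]] += record["revenue"]
    return dict(totals)


business_view = to_business_view(RAW_ORDERS)
revenue_by_region = to_semantic_layer(business_view)
print(revenue_by_region)
```

Each intermediate result (`business_view`, `revenue_by_region`) is a dataset in its own right, which is where the data products discussed next come from.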

Data products for humans

As data is transformed in a data analytics environment, new datasets emerge at each processing stage, forming the basis for data products.

The concept of data product thinking originated and gained prominence with the development of the data mesh, pioneered by Zhamak Dehghani. A data product is akin to a software product. It’s a self-contained, reusable, and domain-specific dataset, encapsulating everything needed for its consumption. This includes the actual dataset, rich metadata (such as lineage, definitions, and quality metrics), code for processing and delivery, and policies governing its use, including security and access controls.
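As a rough illustration of that definition, the sketch below bundles a dataset with its metadata and an access policy in one Python object. The class, its fields, and the sample values are assumptions made for this example, not a standard data mesh schema.

```python
from dataclasses import dataclass, field


@dataclass
class DataProduct:
    """Hypothetical container for a dataset plus what is needed to consume it."""

    name: str
    domain: str
    rows: list
    lineage: list = field(default_factory=list)        # upstream sources
    definitions: dict = field(default_factory=dict)    # business glossary
    quality: dict = field(default_factory=dict)        # quality metrics
    allowed_roles: set = field(default_factory=set)    # access policy

    def read(self, role):
        """Return a copy of the data if the caller's role is permitted."""
        if role not in self.allowed_roles:
            raise PermissionError(f"role {role!r} may not read {self.name!r}")
        return list(self.rows)


# Illustrative values only.
churn_product = DataProduct(
    name="customer_churn_quarterly",
    domain="customer",
    rows=[{"quarter": "2024-Q4", "churn_rate": 0.07}],
    lineage=["crm.accounts", "billing.subscriptions"],
    definitions={"churn_rate": "Share of customers lost during the quarter"},
    quality={"completeness": 0.99},
    allowed_roles={"data_scientist", "analyst"},
)

print(churn_product.read("analyst"))
```

Keeping the policy check inside `read` reflects the idea that governance travels with the product rather than living in a separate system.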

A core principle of data mesh is that data at every level of transformation should be commoditised. Consequently, these data products must be readily discoverable and easily accessible.

While central to data mesh, the underlying idea of treating data with product thinking is not exclusive to it. This mindset can be applied across various data architectures, including the simple architecture depicted above.

Importantly, whatever the architecture, these data products are predominantly consumed by humans – data engineers, data scientists, citizen developers, and professional visualisation developers. While AI agents can technically interact with these existing products, the products are not designed for efficient, automated consumption by AI.

Data products for AI agents

Much as we humans use tools to achieve our objectives, AI agents employ tools with autonomy, with agency, to interact with external systems and capabilities. These tools could be CRMs, email servers, search engines, and, of course, data products. An AI agent chooses how these tools are orchestrated to achieve a given goal, reasoning and deciding on the best sequence and combination of tools rather than merely executing predefined scripts.

For example, we might ask an AI agent to analyse customer churn in the last quarter, identify the main causes, and present its findings in a suitable way. To execute this objective, the AI agent would decide for itself which data products to consult, which other AI agents to connect with, and which other ‘tools’ it needs, such as documents in an internal repository. It’s essentially this autonomous behaviour that underpins the need to treat our data products as tools for AI agents. In fact, it is also possible for a data product to be embodied in an AI agent, where the AI agent serves as the intelligent interface, and possibly the only route, to a data product.

A key discussion taking place in the field of agentic AI is how AI agents can talk to each other and access various tools needed to achieve their objectives. Emerging from these discussions are two important protocols – Model Context Protocol (MCP) and Agent2Agent (A2A).

Agent interaction protocols

MCP addresses how data products can expose their capabilities and data structures directly to AI agents. It aims to standardise the way machines advertise their functions. Think of it as a universal language for data products to declare: ‘Here’s what I am, here’s the data I hold, here’s how you can query me, and here are the operations I support.’ This allows an AI agent to understand and utilise data products.
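The sketch below shows roughly what that declaration could look like for a single data product. MCP tool definitions carry a name, a description, and a JSON Schema for inputs (the `inputSchema` field); everything else here, including the tool name, the churn figures, and the `list_tools` and `call_tool` helpers, is hypothetical. It uses plain Python rather than an MCP SDK and leaves out the protocol's transport entirely.

```python
import json

# MCP-style tool descriptor: name, description, and a JSON Schema for inputs.
CHURN_TOOL = {
    "name": "query_customer_churn",
    "description": "Return the customer churn rate for a given quarter.",
    "inputSchema": {
        "type": "object",
        "properties": {
            "quarter": {"type": "string", "description": "For example 2024-Q4"},
        },
        "required": ["quarter"],
    },
}

# Hypothetical data held by the product.
_CHURN_BY_QUARTER = {"2024-Q4": 0.07, "2025-Q1": 0.05}


def list_tools():
    """What the data product advertises to an agent."""
    return [CHURN_TOOL]


def call_tool(name, arguments):
    """Run the named tool after checking required arguments."""
    if name != CHURN_TOOL["name"]:
        raise ValueError(f"unknown tool: {name}")
    required = CHURN_TOOL["inputSchema"]["required"]
    missing = [key for key in required if key not in arguments]
    if missing:
        raise ValueError(f"missing arguments: {missing}")
    quarter = arguments["quarter"]
    if quarter not in _CHURN_BY_QUARTER:
        raise KeyError(f"no churn data for {quarter}")
    return {"quarter": quarter, "churn_rate": _CHURN_BY_QUARTER[quarter]}


print(json.dumps(list_tools(), indent=2))
print(call_tool("query_customer_churn", {"quarter": "2024-Q4"}))
```

The descriptor is what lets an agent decide, without human help, whether this product is relevant and how to call it.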

A2A can work in tandem with MCP. The A2A protocol defines how individual AI agents communicate, coordinate, and share information among themselves to achieve complex, collaborative goals. A2A allows for data exchange, task delegation, and the formation of multi-agent systems that can work together to extract insights or drive actions across an entire data estate.
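The toy example below illustrates the delegation idea only: agents advertise their skills, and a coordinator hands each task to whichever agent can handle it. It is not the A2A wire format. The `Agent` class, the `delegate` function, the skill names, and the churn figure are all invented for this sketch.

```python
from dataclasses import dataclass


@dataclass
class Agent:
    """Hypothetical agent that advertises skills and handles tasks."""

    name: str
    skills: dict  # skill id -> handler function

    def card(self):
        """A small self-description other agents can inspect."""
        return {"name": self.name, "skills": sorted(self.skills)}

    def handle(self, skill, payload):
        return self.skills[skill](payload)


def delegate(agents, skill, payload):
    """Send the task to the first agent advertising the requested skill."""
    for agent in agents:
        if skill in agent.card()["skills"]:
            return agent.name, agent.handle(skill, payload)
    raise LookupError(f"no agent offers skill {skill!r}")


churn_agent = Agent(
    name="churn-data-agent",
    skills={"churn_rate": lambda p: {"quarter": p["quarter"], "churn_rate": 0.07}},
)
report_agent = Agent(
    name="report-agent",
    skills={
        "summarise": lambda p: f"Churn in {p['quarter']} was {p['churn_rate']:.0%}"
    },
)
agents = [churn_agent, report_agent]

# A coordinator chains two delegated tasks.
_, figures = delegate(agents, "churn_rate", {"quarter": "2024-Q4"})
handled_by, summary = delegate(agents, "summarise", figures)
print(handled_by, "->", summary)
```

In this picture, the churn agent is itself the interface to a data product, while the report agent consumes its output without knowing where the data lives.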

The new road: data architecture in the era of AI

The agentic AI era demands data assets that are not just discoverable and accessible by humans, but inherently intelligible and actionable by machines. Building data products with these protocols isn’t merely an enhancement. It’s an evolution in data strategy and architecture towards making a data estate AI-ready.

This isn’t going to be easy. Data leaders must now chart a course that shifts focus from human-centric data repositories to AI-ready operational tools, all while keeping business as usual on track. These are formidable challenges. But if they execute well, data and AI leaders can pave the way for a fundamentally transformed, AI-driven enterprise.